Two Chinese Students Design a Sign Language Interpreter for Their Aphasiac Friends

Two girls and their team from Tsinghua University and the Beijing University of Aeronautics and Astronautics recently won First Prize in the Good Open Design Challenge with a niche product dedicated to aphasiacs. They originally set out to develop the product to help their own aphasiac friends.

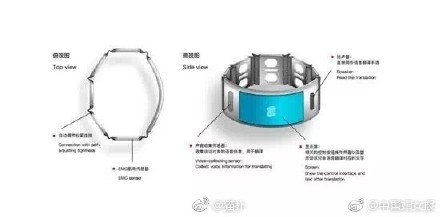

During a brief demonstration, the presenter held out his hand with his thumb up. The Chinese characters for “hello” immediately appeared on the screen of the team's app, which they call “SHOWING”. A black armlet on the presenter's arm, resembling a runner's arm strap, captures the myoelectric signals produced by the hand movements.

SHOWING is a niche product designed specifically for aphasiacs and is expected to launch in late May. Able-bodied people may find it hard to grasp the significance of this product. Sign language is the bridge of communication for aphasiacs, yet for most people it is harder to learn than any foreign language. SHOWING is designed to be the unsung hero of that bridge.

Wang Nana and Huang Shuang, the founders of SHOWING, graduated from the Beijing University of Aeronautics and Astronautics in 2012 and were roommates at university. The two first thought about working on activities related to aphasiacs in 2015.

At one event, Wang Nana met Zhang Quan, an aphasiac graduate of the Special Education College of Beijing Union University, and they became good friends. Because Wang Nana does not know sign language, the two are really more like pen pals: even when they are face to face, they have to resort to WeChat to communicate.

After returning to the dormitory, Wang Nana shared Zhang Quan's story with Huang Shuang. The two went on to meet more aphasiacs and ran into all kinds of communication difficulties. “Sometimes I felt that I was ‘hurting’ them when I talked. Sometimes I wondered whether I was getting carried away and ignoring their feelings,” Wang Nana said.

Huang Shuang, a member of the SHOWING team, presenting the results of their research at the event

Both kind-hearted girls believe that Zhang Quan is one of the luckier aphasiacs, since she is able to study and work, while a great many aphasiacs are marginalized by society because of communication problems. The girls kept wondering how such people could ever be accepted by others if they cannot hear their bosses in meetings or cannot communicate with waiters when ordering food. CCTV live broadcasts provide simultaneous sign language interpretation, but a live broadcast without interpretation or subtitles would be closed to aphasiacs entirely.

When they searched online, the figures they found were shocking. The number of aphasiacs in China (including partially deaf people and patients with stroke, cerebral palsy, or ALS) may be as high as 70 million, about 5% of the total population.

They remembered an image recognition program they had designed, which won First Prize in the Feng Ru Cup (an academic and technological work competition open to BUAA students), and believed this technology might help aphasiacs. Wang Nana recalls: “We felt that we had discovered a need nobody else had noticed, and we wanted to be the first to address it and put our knowledge to good use.”

With this in mind, they set about researching the feasibility of sign language interpretation. They searched the literature, ran experiments, and wrote code, using the 50,000 RMB prize from the Feng Ru Cup as initial capital for the project. Image recognition was ruled out because it cannot be used in every situation, and a glove-based solution was also ruled out as too obtrusive. In the end, they decided to use armlets to capture myoelectric signals. With smartwatches becoming increasingly common, an armlet worn by an aphasiac will not look unusual in any way.

The repeated product iterations were all aimed at better protecting aphasiacs. Huang Shuang remembers their activity “Being an Aphasiac for One Day”. At a convenience store, to ask about a price, the participants had to communicate with the shop assistant using a mobile phone. If it took even a little longer than usual, the people waiting in the queue would start to get impatient. “They would probably be more understanding if they knew I cannot talk. But when they don't know, they complain, and the more they complain, the more upset I get.” Some aphasiacs do not want to be called “deaf people” and would rather be known as “dragon people” (in Chinese, “deaf” and “dragon” have the same pronunciation).

With the research direction set, the key was to keep entering and debugging data to ensure accurate sign language interpretation. To check the model's reliability, at first the two of them entered all the sign language data themselves, repeating the movements with the armlet over and over. Huang Shuang jokes: “We'll develop Kylin arms (a metaphor for a woman's arm that is muscular rather than slim) if we keep practicing like this!”

“When you are by their side helping them in real situations, they appreciate your kindness and are very cooperative,” Wang Nana says. To ensure data diversity and make a design that aphasiacs would truly love, the team contacted the Technical College for the Deaf at Tianjin University of Technology and cooperated with it on data acquisition and smart armlet development. They have also expressed an intention to cooperate with the Disabled Persons' Federation of Beijing's Chaoyang District, which would provide consultation and guidance on SHOWING's products and services. Zhang Quan was also invited to join the team as a consultant.

SHOWING currently covers 200 sign language movements. To ensure precision, each movement has been recorded 1,000 times. After building their own gesture database, the team set up a 7-layer BP neural network (a multilayer feed-forward network trained by error back-propagation) to train on the data. Recognition accuracy currently reaches up to 95%.
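The article names only the model family: a BP network, meaning a fully connected feed-forward network trained by error back-propagation. The minimal sketch below shows what such a gesture classifier can look like in plain Python. Everything in it is an assumption rather than the team's code: the class name `BPNetwork`, the sigmoid hidden layers with a softmax output, the learning rate, and the hypothetical `make_toy_data` helper, whose four-value "myoelectric feature" vectors merely stand in for SHOWING's real gesture database. `layer_sizes` can be given seven entries to mirror a 7-layer network, although the article does not say how the team counts layers or how wide each one is.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def softmax(zs):
    m = max(zs)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in zs]
    total = sum(exps)
    return [e / total for e in exps]


class BPNetwork:
    """Hypothetical fully connected network trained by error back-propagation.

    layer_sizes = [n_inputs, hidden..., n_classes]; sigmoid hidden units,
    softmax output, cross-entropy loss, per-sample gradient descent.
    """

    def __init__(self, layer_sizes, seed=0):
        rng = random.Random(seed)
        # weights[l][j][i]: weight from unit i of layer l to unit j of layer l+1
        self.weights = [
            [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
            for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:])
        ]
        self.biases = [[0.0] * n_out for n_out in layer_sizes[1:]]

    def forward(self, x):
        """Return the activations of every layer, input layer first."""
        acts = [list(x)]
        last = len(self.weights) - 1
        for l, (W, b) in enumerate(zip(self.weights, self.biases)):
            z = [sum(w * a for w, a in zip(row, acts[-1])) + bj
                 for row, bj in zip(W, b)]
            acts.append(softmax(z) if l == last else [sigmoid(v) for v in z])
        return acts

    def predict(self, x):
        probs = self.forward(x)[-1]
        return max(range(len(probs)), key=probs.__getitem__)

    def train_step(self, x, label, lr=0.5):
        acts = self.forward(x)
        # Softmax + cross-entropy: the output error is p - one_hot(label).
        delta = [p - (1.0 if k == label else 0.0) for k, p in enumerate(acts[-1])]
        for l in range(len(self.weights) - 1, -1, -1):
            W, prev = self.weights[l], acts[l]
            if l > 0:
                # Back-propagate the error through W (before updating it)
                # and through the sigmoid derivative a * (1 - a).
                new_delta = [
                    sum(W[j][i] * delta[j] for j in range(len(delta)))
                    * prev[i] * (1.0 - prev[i])
                    for i in range(len(prev))
                ]
            # Gradient-descent update of this layer's weights and biases.
            for j, d in enumerate(delta):
                for i, a in enumerate(prev):
                    W[j][i] -= lr * d * a
                self.biases[l][j] -= lr * d
            if l > 0:
                delta = new_delta


def make_toy_data(n_per_class=30, seed=1):
    """Hypothetical stand-in for armlet features: noisy copies of one prototype per gesture."""
    rng = random.Random(seed)
    prototypes = [
        [0.9, 0.1, 0.1, 0.1],
        [0.1, 0.9, 0.1, 0.1],
        [0.1, 0.1, 0.9, 0.9],
    ]
    data = []
    for label, proto in enumerate(prototypes):
        for _ in range(n_per_class):
            data.append(([v + rng.gauss(0, 0.05) for v in proto], label))
    rng.shuffle(data)
    return data


if __name__ == "__main__":
    data = make_toy_data()
    net = BPNetwork([4, 8, 3])
    for epoch in range(200):
        for x, y in data:
            net.train_step(x, y)
    acc = sum(net.predict(x) == y for x, y in data) / len(data)
    print(f"training accuracy: {acc:.2f}")
```

Each toy gesture class is just a noisy copy of one prototype vector, so this task is far easier than real myoelectric recognition; the sketch only illustrates the forward pass, the output error, and the layer-by-layer propagation of that error back to the weights.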

Until last August, the team had only two members, Wang Nana and Huang Shuang; other members joined after that. They still have no office. They hold meetings wherever they can find space, such as cafés, restaurants, and classrooms, while all research and development work is done in their own laboratory. Even so, Wang Nana says they have never thought about giving up. Some members have left the team to study overseas, and the two girls are both still students themselves. Nevertheless, they will keep working on the product and are inviting new talent to join the team for research and development.

Technology start-up teams led by female students are uncommon, and the two have first-hand experience of the “breaking-in period”. Early on, whenever they disagreed, Huang Shuang would wait until they had both calmed down and then talk things through in the dormitory. In team discussions, the principle is to focus on facts rather than personal opinions and to stay open-minded. The team also has other technical members, and when they stay up late writing code together, the two girls act as “programmer motivators” to give them a boost.

The two girls, born in 1994 and 1995 respectively, smile and say: “We haven't fully entered society yet, so we don't have the burden of buying an apartment or other social pressures. We just think that as long as our technology can create value for society and genuinely help people, and we have the time to spare, why not just do it!”

They have many plans for the future. For example, the SHOWING app may add features such as face-to-face chat and longer conversations. Later, a closed loop consisting of sign language teaching, sign language letters, and the smart armlet may be launched to address problems such as the shortage of sign language teachers. Once mature, such AI technologies could also be applied in other fields, for example helping young piano learners play more accurately.

Recently, at the Good Open Design Challenge co-organized by Baidu and NUDP, the SHOWING team won First Prize and the Popular Award, and the project also received the “Most Inclusive Program” award.

The SHOWING Team at the Awarding Ceremony

*English translation of the following source: http://weibo.com/ttarticle/p/show?id=2309351000774105484176123532